Fast Python loops with Cython

Cython is essentially a Python to C translator. Cython allows you to use syntax similar to Python, while achieving speeds near that of C.

This post describes how to use Cython to speed up a single Python function involving ‘tight loops’. I’ll leave more complicated applications - with many functions and classes - for a later post.

Should I use Cython?

If you’re using Python and need performance there are a variety of options; see quantecon for a detailed comparison. Of course, you could always choose a different language like Julia, or be brave and learn C itself.

While the static compilation approach of Cython may not be cutting edge, Cython is mature, well documented and capable of handling large complicated projects. Cython code lies behind many of the big Python scientific libraries including scikit-learn and pandas.

The example

Our example function evaluates a Radial Basis Function (RBF) approximation scheme. We assume each data point is a ‘center’ and use Gaussian type RBFs

\[ \hat Y_i = \sum_{j=1}^{N} \beta_j \exp\left(-\left(\theta \, \lVert X_j - X_i \rVert\right)^2\right) \]

so our function takes an input data array \( X\) of shape (N, D), a parameter array \( \beta\) of length N and a ‘bandwidth’ parameter \(\theta\), and returns an array of fitted values \( \hat Y \) of length N.

Python loops

Here’s the naive Python implementation

    from math import exp
    import numpy as np

    def rbf_network(X, beta, theta):

        N = X.shape[0]
        D = X.shape[1]
        Y = np.zeros(N)

        for i in range(N):
            for j in range(N):
                r = 0
                for d in range(D):
                    r += (X[j, d] - X[i, d]) ** 2
                r = r**0.5
                Y[i] += beta[j] * exp(-(r * theta)**2)

        return Y

Let’s make up some data

    import numpy as np

    D = 5
    N = 1000
    X = np.array([np.random.rand(N) for d in range(D)]).T
    beta = np.random.rand(N)
    theta = 10

Timing this in IPython we get

    %timeit rbf_network(X, beta, theta)
    1 loops, best of 3: 11.5 s per loop

Damn, those Python loops are slow!
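Outside IPython, the standard library timeit module gives a comparable measurement. The following is a minimal sketch, not the setup used for the numbers in this post: it times a list-based stand-in (the hypothetical rbf_network_lists) on a much smaller, made-up problem so that it finishes quickly, and keeps the best of three runs in the same spirit as the %timeit output above.

```python
import random
import timeit
from math import exp


def rbf_network_lists(X, beta, theta):
    """Pure-Python stand-in for rbf_network, using lists instead of arrays."""
    N, D = len(X), len(X[0])
    Y = [0.0] * N
    for i in range(N):
        for j in range(N):
            r2 = 0.0
            for d in range(D):
                r2 += (X[j][d] - X[i][d]) ** 2
            # (r * theta)**2 with r = sqrt(r2) equals r2 * theta**2
            Y[i] += beta[j] * exp(-r2 * theta ** 2)
    return Y


# Small, made-up problem size so the timing run stays short (assumption).
N, D, theta = 100, 5, 10
random.seed(0)
X = [[random.random() for _ in range(D)] for _ in range(N)]
beta = [random.random() for _ in range(N)]

# Three independent runs of one call each; keep the fastest one.
runs = timeit.repeat(lambda: rbf_network_lists(X, beta, theta), repeat=3, number=1)
best = min(runs)
print(f"best of 3: {best * 1e3:.1f} ms per loop")
```

Taking the minimum rather than the mean reduces the influence of other processes running on the machine.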

scipy.interpolate.Rbf

So in this case we’re lucky and there’s an external numpy based implementation

    from scipy.interpolate import Rbf

    rbf = Rbf(X[:,0], X[:,1], X[:,2], X[:,3], X[:, 4], np.random.rand(N))
    Xtuple = tuple([X[:, i] for i in range(D)])

    %timeit rbf(Xtuple)
    1 loops, best of 3: 637 ms per loop

Much better. But what if we want to go faster, or we don’t have a library we can use?
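If no library fits, plain NumPy broadcasting is one intermediate option before reaching for Cython, and it doubles as a reference for checking faster versions against. This is a sketch under assumptions rather than part of the benchmark in this post: rbf_network_vectorized is a hypothetical name, it has not been timed here, and it builds an (N, N, D) temporary array, so memory use grows quickly with N.

```python
import numpy as np


def rbf_network_vectorized(X, beta, theta):
    """Loop-free version of rbf_network (hypothetical helper, not timed in the post)."""
    X = np.asarray(X, dtype=float)
    beta = np.asarray(beta, dtype=float)
    # diff[i, j, d] = X[i, d] - X[j, d], shape (N, N, D)
    diff = X[:, np.newaxis, :] - X[np.newaxis, :, :]
    # Squared Euclidean distance between every pair of points, shape (N, N)
    sq_dist = (diff ** 2).sum(axis=-1)
    # Gaussian kernel: exp(-(r * theta)**2) with r**2 = sq_dist
    K = np.exp(-sq_dist * theta ** 2)
    # Y[i] = sum_j K[i, j] * beta[j]
    return K @ beta


# Two points a distance 1 apart, so the off-diagonal kernel value is exp(-1).
X_small = np.array([[0.0], [1.0]])
beta_small = np.array([1.0, 2.0])
Y_small = rbf_network_vectorized(X_small, beta_small, theta=1.0)
print(Y_small)
```

On the two-point example, the first fitted value is 1 + 2·exp(-1) and the second is exp(-1) + 2, which is easy to confirm by hand.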

Cython

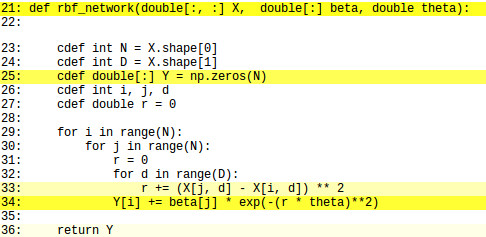

A Cython version - implemented in the file fastloop.pyx - looks something like this

    from math import exp
    import numpy as np

    def rbf_network(double[:, :] X, double[:] beta, double theta):

        cdef int N = X.shape[0]
        cdef int D = X.shape[1]
        cdef double[:] Y = np.zeros(N)
        cdef int i, j, d
        cdef double r = 0

        for i in range(N):
            for j in range(N):
                r = 0
                for d in range(D):
                    r += (X[j, d] - X[i, d]) ** 2
                r = r**0.5
                Y[i] += beta[j] * exp(-(r * theta)**2)

        return Y

So far all we’ve done is add some type declarations. For local variables we use the cdef keyword. For arrays we use ‘memoryviews’ which can accept numpy arrays as input.

Note that you don’t have to add type declarations in a *.pyx file. Untyped variables are simply treated as Python objects, so any lines that use them keep going through the Python runtime rather than being translated to plain C.
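One practical consequence of the typed signature: a double[:, :] memoryview only accepts float64 data, so integer or float32 arrays need converting before the call. The helper below is a hedged sketch (as_double_arrays is a hypothetical name, not part of this post's code). It also uses Python's built-in memoryview as a stand-in for the Cython one to show that np.asarray can wrap such a view as an array without copying, which may be handy because rbf_network returns a memoryview rather than a numpy array.

```python
import numpy as np


def as_double_arrays(X, beta):
    """Convert inputs to float64 arrays of shape (N, D) and (N,) (hypothetical helper)."""
    X = np.asarray(X, dtype=np.float64)
    beta = np.asarray(beta, dtype=np.float64)
    if X.ndim != 2 or beta.ndim != 1 or X.shape[0] != beta.shape[0]:
        raise ValueError("expected X of shape (N, D) and beta of length N")
    return X, beta


# Integer inputs, which a double-typed memoryview argument would reject.
X64, beta64 = as_double_arrays([[0, 1], [2, 3], [4, 5]], [1, 2, 3])

# The built-in memoryview stands in for Cython's typed memoryview here;
# both expose the data through the buffer protocol.
view = memoryview(beta64)
back = np.asarray(view)  # wraps the same memory, no copy
print(X64.dtype, view.format, np.shares_memory(back, beta64))
```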

To compile we need a setup.py script that looks something like this

    from setuptools import setup
    from Cython.Build import cythonize

    setup(name="fastloop", ext_modules=cythonize("fastloop.pyx"))

then we compile from the terminal with

    python setup.py build_ext --inplace

which generates a C code file fastloop.c and a compiled Python extension fastloop.so.

Let’s test it out

    from fastloop import rbf_network

    %timeit rbf_network(X, beta, theta)
    10 loops, best of 3: 209 ms per loop

OK, but we can do better. With Cython there are a few ‘tricks’ involved in achieving good performance. Here’s the first one: if we type this in the terminal

    cython fastloop.pyx -a

we generate a fastloop.html file which we can open in a browser

Lines highlighted yellow are still using Python and are slowing our code down. Our goal is to get rid of yellow lines, especially any inside of loops.

Our first problem is that we’re still using the Python exponential function. We need to replace this with the C version. The main functions from math.h are included in the Cython libc library, so we just replace from math import exp with

    from libc.math cimport exp

Next we need to add some compiler directives; the easiest way is to add this line to the top of the file

    #cython: boundscheck=False, wraparound=False, nonecheck=False

Note that with these checks turned off you can get segmentation faults rather than nice error messages, so it’s best to debug your code before putting this line in.
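To make concrete what the first two directives switch off, here is the behaviour in ordinary Python, which Cython reproduces for indexed access while the checks are on: negative indices wrap around to the end, and out-of-range indices raise an IndexError. This is only an illustration using a plain list. With boundscheck=False and wraparound=False the compiled code skips both steps, so the same mistakes can read or write memory outside the array instead.

```python
values = [1.0, 2.0, 3.0]

# Wraparound: a negative index counts back from the end of the sequence.
last = values[-1]

# Bounds check: an index past the end is caught and reported cleanly.
try:
    values[3]
    caught = False
except IndexError:
    caught = True

print(last, caught)
```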

Next we can consider playing with compiler flags (these are C tricks rather than Cython tricks as such). When using gcc the most important option seems to be -ffast-math. From my limited experience, this can improve speeds a lot, with no noticeable loss of reliability. To implement these changes we need to modify our setup.py file

from distutils.core import setup

from distutils.extension import Extension

from Cython.Distutils import build_ext

ext_modules=[ Extension("fastloop",

["fastloop.pyx"],

libraries=["m"],

extra_compile_args = ["-ffast-math"])]

setup(

name = "fastloop",

cmdclass = {"build_ext": build_ext},

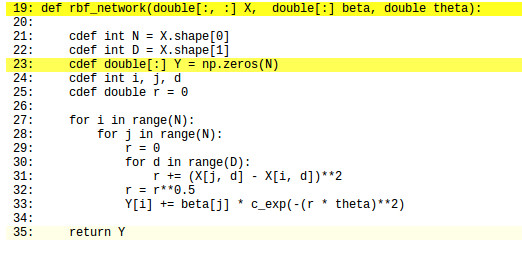

ext_modules = ext_modules)Now if we run cython fastloop.pyx -a again we will see the loops are now free of Python

The yellow outside the loops is irrelevant here (but would matter if we needed to call this function many times within another loop).

Now if we recompile and test it out we get

    from fastloop import rbf_network

    %timeit rbf_network(X, beta, theta)
    10 loops, best of 3: 42.2 ms per loop

OK, now we’re getting there.
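A note on what -ffast-math trades away: among other things, it lets the compiler reorder floating point arithmetic as if it were exactly associative, which it is not. The snippet below is an illustration in plain Python, not a test of gcc itself, showing that the grouping of a sum can change the last bits of the result.

```python
a, b, c = 0.1, 0.2, 0.3

left = (a + b) + c   # add the two smallest terms first
right = a + (b + c)  # add the two largest terms first

print(left == right)
print(abs(left - right))
```

Differences of this size are usually harmless, but code that depends on strict IEEE behaviour (NaN or infinity checks, compensated summation) may need the flag left off.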

Calling C functions

So what else can we do? Well it turns out the exponential function is a bit of a bottleneck here, even the C version. One option is to use a fast approximation to the exponential function

    #include <math.h>

    static union
    {
        double d;
        struct
        {
    #ifdef LITTLE_ENDIAN
            int j, i;
    #else
            int i, j;
    #endif
        } n;
    } _eco;

    #define EXP_A (1048576/M_LN2) /* use 1512775 for integer version */
    #define EXP_C 60801 /* see text for choice of c values */
    #define EXP(y) (_eco.n.i = EXP_A*(y) + (1072693248 - EXP_C), _eco.d)

From Cython it’s easy to call C code. Put the above code in vfastexp.h, then just add the following to our fastloop.pyx file

    cdef extern from "vfastexp.h":
        double exp_approx "EXP" (double)

So now we can just use exp_approx() in place of exp(). This gives us

    from fastloop import rbf_network

    %timeit rbf_network(X, beta, theta)
    100 loops, best of 3: 15.3 ms per loop

The Wash-up

| Method | Time (ms) | Speed up |
|---|---|---|
| Python loops | 11500 | - |
| scipy.interpolate.Rbf | 637 | 18 |
| Cython | 42 | 272 |
| Cython with approximation | 15 | 751 |
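The approximation buys its speed with accuracy. To get a rough feel for the cost, the sketch below re-implements the EXP macro in Python using the struct module (exp_approx_py is a hypothetical name, and the packing assumes the IEEE 754 double layout the macro relies on). It writes the scaled input into the upper 32 bits of a double, leaves the lower 32 bits zero, and reports the worst relative error over a small range of inputs, which should come out at a few percent.

```python
import struct
from math import exp, log

EXP_A = 1048576 / log(2)  # 2**20 / ln(2), as in the macro
EXP_C = 60801             # correction constant from the macro


def exp_approx_py(y):
    """Python rendering of the EXP macro (hypothetical helper, for illustration)."""
    # C converts the double to int by truncating toward zero; int() does the same.
    i = int(EXP_A * y + (1072693248 - EXP_C))
    # Low 32 bits (j) are zero; high 32 bits (i) hold sign, exponent and top of mantissa.
    return struct.unpack("<d", struct.pack("<ii", 0, i))[0]


# Sample y from -5.0 to 5.0 in steps of 0.01 and track the worst relative error.
ys = [k / 100 for k in range(-500, 501)]
worst = max(abs(exp_approx_py(y) - exp(y)) / exp(y) for y in ys)
print(f"worst relative error on [-5, 5]: {worst:.2%}")
```

Whether an error of that size is acceptable depends on the application, so it is worth checking the fitted values against the exact version before adopting the faster one.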

So there are a few tricks to learn, but once you’re on top of them Cython is fast and easy to use. Maybe not as easy as Python, but certainly much better than learning C.